Sleep to educate

##Hypnopaedia

Wouldn’t it be good if you could just fall asleep and wake up knowing fluent French or Spanish? Hypnopaedia is just that: the ability to educate the mind while sleeping. Science fiction authors such as Aldous Huxley have imagined the kind of ‘technofuture utopia’ we might end up in if it ever became possible to educate yourself while you sleep. In Huxley’s Brave New World, sleep-teaching is used to condition people into staying within their designated part of society. The protagonist, John, ends up hanging himself, possibly a sign that this shouldn’t be the way forward for this method of education. The novel A Clockwork Orange also sees sleep-learning techniques used upon the main character, although to no avail.

There was initial optimism about the ability to learn while you sleep. Research in 1958 by William Dement and Edward Wolpert suggested that external stimuli can be interpreted by the mind and incorporated into participants’ dreams: one subject who was squirted with water reported, on being woken afterwards, a dream of a leaky roof. Conduit & Coleman in 1998 found that between 10% and 50% of people report external stimuli being incorporated into their dreams.

Incorporating external stimuli can be seen as a lower level of function; actually educating the mind during sleep is another layer, since taking in information and later recalling it requires greater mental function. A classic example of incorporating an external stimulus into a dream is a sleeper hearing their alarm in their dream just before waking. A 1956 study by Charles W. Simon and William H. Emmons found that recall of material on waking was low unless alpha wave activity was observed, which also meant that the subject was about to awaken.

##Pavlov and his dogs

The ability to teach the body to respond to a stimulus is best known from Pavlov’s famous experiment involving dogs, a bell, a bowl of dog food and a lot of dog slobber. Pavlov conditioned the dogs to associate a bell with being fed, so that if he rang the bell but didn’t feed them, the dogs would still salivate. This required a positive physiological response from the body, which in Pavlov’s case was triggered by the food. In 2012, Anat Arzi of the Weizmann Institute of Science in Israel used classical conditioning to teach fifty-five participants to associate odors with sounds as they slept. Arzi is quoted as saying “There will be clear limits on what we can learn in sleep, but I speculate that they will be beyond what we have demonstrated.”

##Directing Dreams

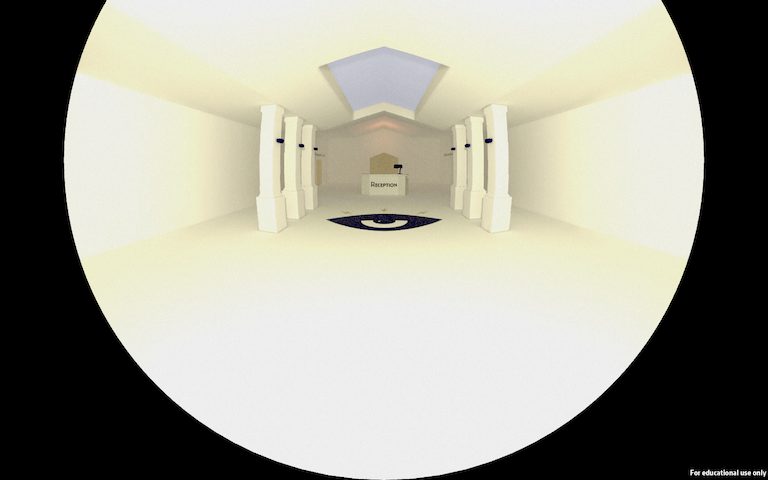

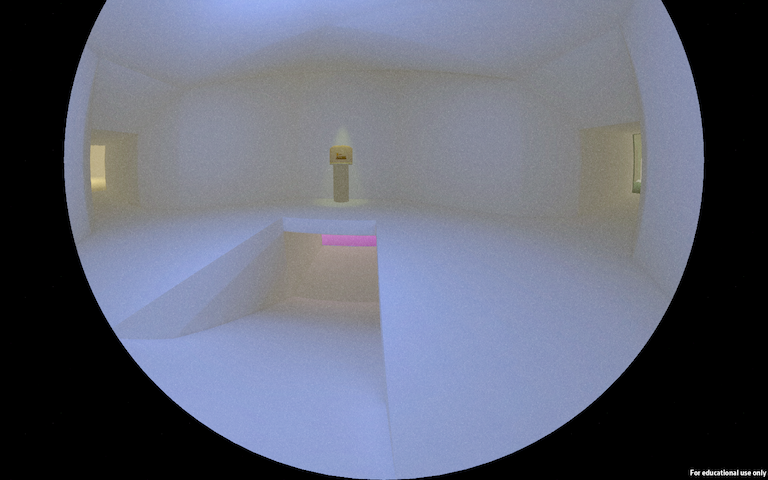

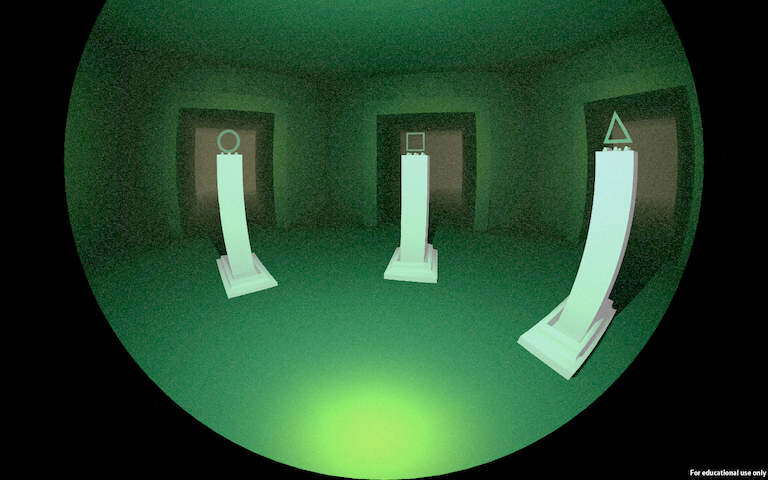

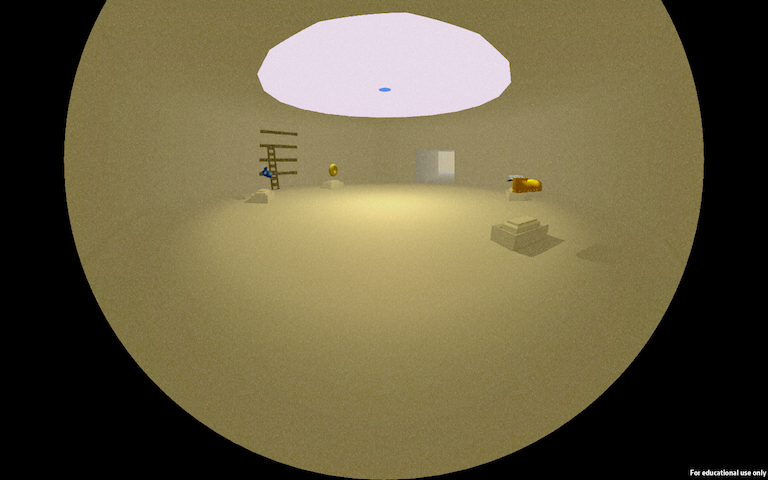

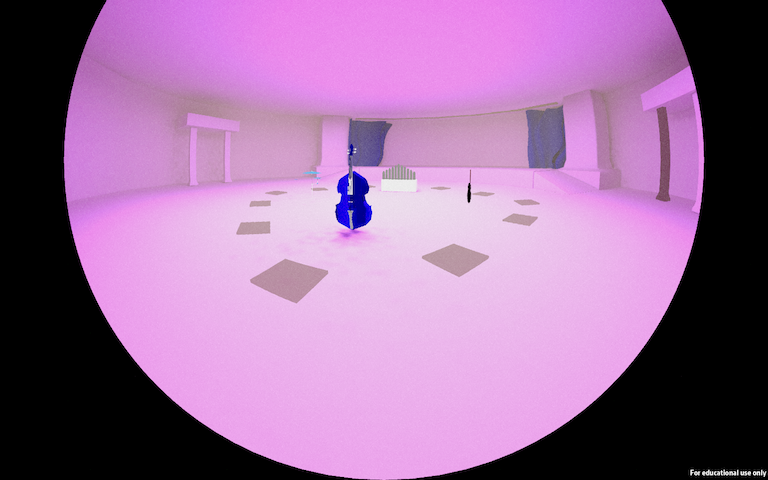

Luke Jerram, a digital artist, has worked on projects that try to enhance and alter the dreams of participants in his installations - a notable one being Dream Director, which aims to raise questions about the rules of interaction and the boundaries of science and art. Participants in the installation are allocated sleep pods, each with specific sounds that are used to try to alter their dreams - during the day the public are invited to log their own dreams or view the logs of the participants’ dreams. While Jerram aimed simply to mold the dream around an external stimulus, in the same fashion as Dement and Wolpert in 1958, we would like to push further, so that participants are able to recall the information we pass to them during their sleep.